As a new and self-taught programmer, I’ve never gotten along with complicated syntax. Python was one of the first languages I tried, and I loved it immediately for its readable, simple syntax. It felt almost like writing English. When I stumbled upon Mojo, it seemed like the best of both worlds, combining Python’s straightforward syntax with the performance of compiled languages like Rust and C. Still, I looked for a way to speed up my learning process so that I could focus less on syntax and more on building.

Not long after discovering Mojo, I came across a LinkedIn post about Cursor, an AI-powered code editor that was built as a fork of Visual Studio Code. I was blown away by how fast I could build when I wasn’t constrained by my lack of syntactic mastery, or by going back and forth between a code editor and the browser to use ChatGPT.

Was the generated code perfect? No, but it was a great starting point for me to iterate on, and Cursor fundamentally changed the way that I learn new programming languages. This paradigm shift isn’t unique to Cursor; GitHub Copilot and other AI-powered IDE plugins have changed the way that people program and learn to program. I was excited to use Cursor to write Mojo, but I quickly realized that doing so would be difficult – because Mojo is a new, still-evolving language, most large language models struggle to write correct Mojo that will compile and run using the latest release.

Instead of giving up and writing Mojo the old-fashioned way, I decided to take on this challenge as a learning experience. My mission was simple: help AI write better Mojo.

My first attempt at using AI to write Mojo

The first time I tried to write Mojo with Cursor, I used one of their default models: Anthropic’s Claude 3.5 Sonnet. This model’s training data cuts off in April 2024, meaning that there are five months of Mojo updates missing from its training set. To test the model’s Mojo-writing abilities, I gave Cursor a simple task: “Write a Mojo script to output the sum of two values”:

There were two things I noticed about the generated snippet:

- It used let statements. Early in its evolution, Mojo supported both let and var statements. At one point in time, this would have been correct Mojo, but support for let statements was removed earlier this year.
- Although every Mojo program must include a function named main() as its entry point, the code in the main() function will run without explicitly calling main(), so the last line of the generated snippet is unnecessary.
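Both issues are mechanical enough that a script could flag them in generated code. The following is a rough Python sketch of that idea, not something used in the experiment described here. The function name find_mojo_issues, the regular expressions, and the sample input are all illustrative assumptions, and the checks only catch the simplest cases.

```python
import re

# Hypothetical helper: flags the two issues described above in Mojo source text.
# A `let` declaration at the start of a line (after any indentation).
LET_DECL = re.compile(r"^\s*let\s+\w+", re.MULTILINE)
# An unindented `main()` call on a line by itself.
TOP_LEVEL_MAIN_CALL = re.compile(r"^main\(\)\s*$", re.MULTILINE)


def find_mojo_issues(source: str) -> list[str]:
    """Return human-readable warnings for a Mojo snippet (heuristic only)."""
    issues = []
    if LET_DECL.search(source):
        issues.append("uses let, which Mojo no longer supports; use var instead")
    if TOP_LEVEL_MAIN_CALL.search(source):
        issues.append("calls main() explicitly; Mojo runs main() automatically")
    return issues


# Illustrative input only, not the snippet Cursor actually produced.
sample = "fn main():\n    let a = 3\n    let b = 5\n    print(a + b)\n\nmain()\n"
for issue in find_mojo_issues(sample):
    print(issue)
```

A check like this cannot tell whether code compiles, so it would complement running the Mojo compiler rather than replace it.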

Overall, the performance in this case was okay, but not great. If I were grading Cursor and Sonnet’s Mojo-writing skills, I would have given them a C at this point.

Turning to ChatGPT for advice

I needed to improve Cursor’s Mojo writing abilities, and the internet is full of recommended techniques to make AI more accurate – from RAG (and graph RAG), to fine-tuning, to prompt engineering. I was overwhelmed by the choices, so I turned to ChatGPT for advice, feeding it an example of the semi-correct Mojo code that Cursor had generated and asking how I could get better performance. ChatGPT’s recommendation? Fine-tune a model using data specific to the Mojo standard library.

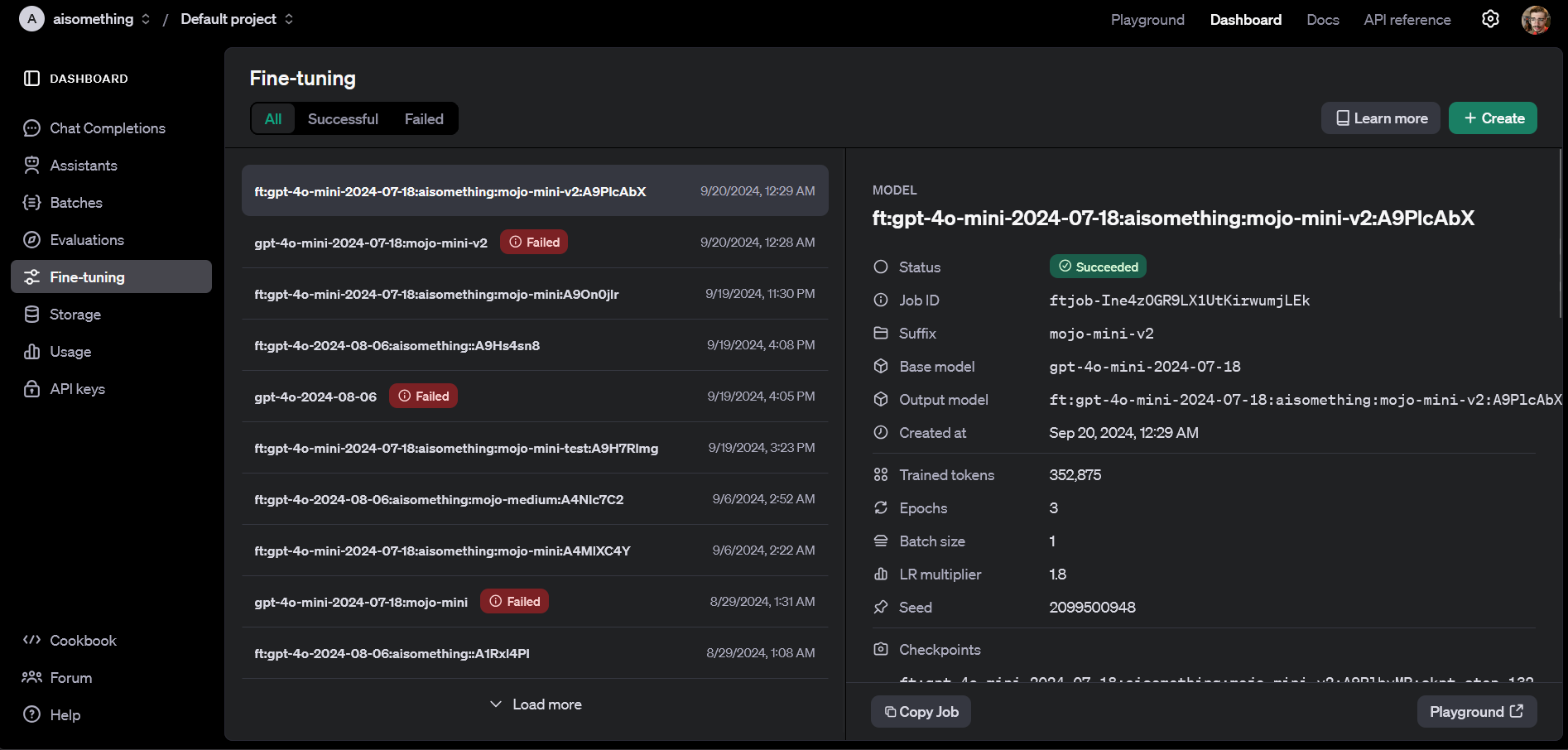

Given that this was my first foray into fine-tuning, I kept it simple and used OpenAI’s dashboard. I put ChatGPT to work again by providing it with Mojo’s documentation and asking it to generate training data.
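For readers who haven’t seen fine-tuning data before: OpenAI’s chat fine-tuning takes a JSONL file, where each line is a JSON object holding a list of messages with system, user, and assistant roles. Below is a minimal Python sketch of writing such a file. The system prompt, the single example pair, and the file name mojo_training.jsonl are hypothetical stand-ins, not the actual training data used here.

```python
import json

# Hypothetical system prompt and example pair; not the actual training data.
SYSTEM_PROMPT = "You are an assistant that writes current, compilable Mojo."

examples = [
    {
        "question": "Write a Mojo script to output the sum of two values",
        "answer": "fn main():\n    var a = 3\n    var b = 5\n    print(a + b)\n",
    },
]


def to_chat_record(question: str, answer: str) -> dict:
    """Wrap one question/answer pair in the chat-style fine-tuning format."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(pairs: list[dict], path: str) -> None:
    """Write one JSON object per line, as the JSONL format requires."""
    with open(path, "w", encoding="utf-8") as f:
        for pair in pairs:
            record = to_chat_record(pair["question"], pair["answer"])
            f.write(json.dumps(record) + "\n")


write_jsonl(examples, "mojo_training.jsonl")
```

A real training file needs many such lines; a single example is shown only to make the shape of each record clear.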

After fine-tuning the model with this data and setting Cursor to use the resulting model, I gave Cursor the same prompt: “Write a Mojo script to output the sum of two values”:

This answer was an improvement, but I noticed one glaring issue with the generated code: the call to print(sum(3, 5)) wasn’t inside a main() function, so running this code would result in the following error:

The code was otherwise correct. Once I put print(sum(3, 5)) inside a main() function, the script ran and returned 8 as the sum of 3 and 5. I also wanted to test whether fine-tuned GPT-4o’s knowledge of Mojo variable syntax was any better than Claude 3.5 Sonnet’s, so I gave Cursor another command: “Use variables.” In response, Cursor updated my script:

This syntax is correct! Overall, I’d grade Cursor and my fine-tuned GPT-4o a B when it comes to writing Mojo.

I also wanted to test whether regular GPT-4o was aware that let is no longer supported by Mojo. To do so, I switched over to the base model and asked Cursor to “write a Mojo script to output the sum of two values”:

This result was clearly worse than what fine-tuned GPT-4o generated: it reverted to the outdated let syntax, added an unnecessary call to main() at the end, and overcomplicated the solution by creating the add() function as part of a struct. This gave me confidence that fine-tuning GPT-4o using data generated from Mojo’s documentation had significantly improved the model’s Mojo skills.

My takeaways and next steps

Although GPT-4o wrote passable Mojo in Cursor after fine-tuning on the Mojo docs, I’m continuing to experiment with techniques to improve performance. For my latest iteration, I’ve created a JSON file containing all the Mojo-related resources I’ve been able to find on the web, including documentation, blog posts, code snippets, and tutorials. I wrote a script that feeds this file to GPT-4o and asks the model to generate a set of questions related to Mojo, acting as a user trying to learn Mojo. My script then uses OpenAI’s new o1-preview model to answer the questions generated by GPT-4o, using the resources in the JSON file. o1-preview seems to do a better job of reasoning through multiple steps and avoiding hallucination in its answers.
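The two-stage flow described above can be sketched in Python roughly as follows. This is an outline under stated assumptions rather than the actual script: the resource file is assumed to load as a list of objects with a text field, the prompts are invented, and the model calls are replaced by stub functions (fake_questioner and fake_answerer) so the sketch runs offline. In practice, each stub would be swapped for a real request to the question-writing and answer-writing models.

```python
import json
from typing import Callable

# Stand-in type for a model call: takes a prompt string, returns the reply text.
AskFn = Callable[[str], str]


def generate_questions(resources: list[dict], ask_questioner: AskFn) -> list[str]:
    """Ask the first model for learner-style questions, one per line, per resource."""
    questions = []
    for resource in resources:
        prompt = (
            "Act as a user trying to learn Mojo. Write questions about this "
            "resource, one per line:\n\n" + resource["text"]
        )
        reply = ask_questioner(prompt)
        questions.extend(line.strip() for line in reply.splitlines() if line.strip())
    return questions


def answer_questions(
    questions: list[str], resources: list[dict], ask_answerer: AskFn
) -> list[dict]:
    """Ask the second model to answer each question using the resources as context."""
    context = "\n\n".join(resource["text"] for resource in resources)
    pairs = []
    for question in questions:
        prompt = f"Using only these resources:\n\n{context}\n\nAnswer: {question}"
        pairs.append({"question": question, "answer": ask_answerer(prompt)})
    return pairs


# Stub models (hypothetical) so the sketch runs without network access.
def fake_questioner(prompt: str) -> str:
    return "How do I declare a variable in Mojo?\nWhat is the entry point of a Mojo program?"


def fake_answerer(prompt: str) -> str:
    return "(model answer would appear here)"


# Assumed resource shape; the real JSON file may be structured differently.
resources = [{"title": "Mojo basics", "text": "Mojo programs start at fn main(). Variables use var."}]
questions = generate_questions(resources, fake_questioner)
dataset = answer_questions(questions, resources, fake_answerer)
print(json.dumps(dataset, indent=2))
```

Passing the model calls in as functions keeps the two stages independent, so either model can be changed without touching the rest of the pipeline.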

I plan to continue scaling this dataset, and then use the larger dataset to fine-tune GPT-4o again. I hope to use this fine-tuned model to create an even larger dataset of Mojo questions and answers. Finally, I’ll fine-tune an open-source model like Llama 3 on my resulting Mojo dataset, unleashing the ultimate model to help me and other Mojicians write better Mojo. This is just the beginning of my journey with Mojo and AI, and I can’t wait to see what the future holds.

Have you used AI to write Mojo? Did you discover any tips or tricks along the way? Any advice for me on my journey to create the ultimate Mojo model? Join the conversation in Modular’s Discord server.